Hi,

I recently purchased an RPi 5 and an Argon ONE V3 M.2 NVMe PCIe case to use as a home automation server. Installation and booting from the NVMe drive went smoothly. After 2 days of uptime, the server suddenly became unresponsive. After rebooting, I found several kernel messages saying “controller is down; will reset” (see example below):

Apr 09 01:00:07 iotserver kernel: nvme nvme0: controller is down; will reset: CSTS=0xffffffff, PCI_STATUS=0x10

Apr 09 01:00:07 iotserver kernel: nvme nvme0: Does your device have a faulty power saving mode enabled?

Apr 09 01:00:07 iotserver kernel: nvme nvme0: Try "nvme_core.default_ps_max_latency_us=0 pcie_aspm=off" and report a bug

Apr 09 01:00:07 iotserver kernel: nvme 0000:01:00.0: enabling device (0000 -> 0002)

Apr 09 01:00:07 iotserver kernel: nvme nvme0: 4/0/0 default/read/poll queues

Apr 09 01:04:19 iotserver kernel: nvme nvme0: controller is down; will reset: CSTS=0xffffffff, PCI_STATUS=0x10

Apr 09 01:04:19 iotserver kernel: nvme nvme0: Does your device have a faulty power saving mode enabled?

Apr 09 01:04:19 iotserver kernel: nvme nvme0: Try "nvme_core.default_ps_max_latency_us=0 pcie_aspm=off" and report a bug

Apr 09 01:04:19 iotserver kernel: nvme 0000:01:00.0: enabling device (0000 -> 0002)

Apr 09 01:04:19 iotserver kernel: nvme nvme0: 4/0/0 default/read/poll queues
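To see how closely the resets follow each other, the timestamps can be pulled out of journal lines like the ones above. This is only a minimal sketch: the names `reset_times` and `gaps_seconds` are made up for illustration, the year is an assumption (the log lines do not include one), and month parsing assumes an English locale.

```python
import re
from datetime import datetime

# Matches the syslog-style timestamp at the start of a "controller is down" line.
RESET_RE = re.compile(
    r"^(\w{3} \d{2} \d{2}:\d{2}:\d{2}) .*controller is down; will reset"
)

def reset_times(lines, year=2024):
    """Return a datetime for every NVMe reset message found in `lines`.

    `year` is an assumption: the timestamps in the log carry no year.
    """
    times = []
    for line in lines:
        match = RESET_RE.match(line)
        if match:
            stamp = f"{year} {match.group(1)}"
            times.append(datetime.strptime(stamp, "%Y %b %d %H:%M:%S"))
    return times

def gaps_seconds(times):
    """Return the number of seconds between each pair of consecutive resets."""
    return [(later - earlier).total_seconds()
            for earlier, later in zip(times, times[1:])]
```

Feeding it the two reset lines quoted above gives a single gap of 252 seconds, i.e. the controller dropped out again about four minutes after the first reset.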

After a later crash, I hooked the server up to a screen and was able to capture error messages that were printed on screen but never made it into the logs, because the file system had been remounted read-only (hand-typed from a photo, may contain some “spelling errors”):

[118555.548795] EXT4-fs error (device nvme0n1p2) in ext4_reserve_inode_write:5764: Journal has aborted

[118555.548807] EXT4-fs error (device nvme0n1p2) in ext4_reserve_inode_write:5764: Journal has aborted

[118555.550145] EXT4-fs error (device nvme0n1p2): ext4_dirty_inode:5968: inode #120009: comm systemd-journal: mark_inode_dirty error

[118555.551476] EXT4-fs error (device nvme0n1p2): ext4_dirty_inode:5968: inode #545366: comm python3: mark_inode_dirty error

[118555.552829] EXT4-fs error (device nvme0n1p2) in ext4_dirty_inode:5969: Journal has aborted

[118555.552927] EXT4-fs error (device nvme0n1p2): ext4_journal_check_starting:84: comm systemd-journal: Detected aborted journal

[118555.617595] EXT4-fs (nvme0n1p2): Remounting filesystem read-only

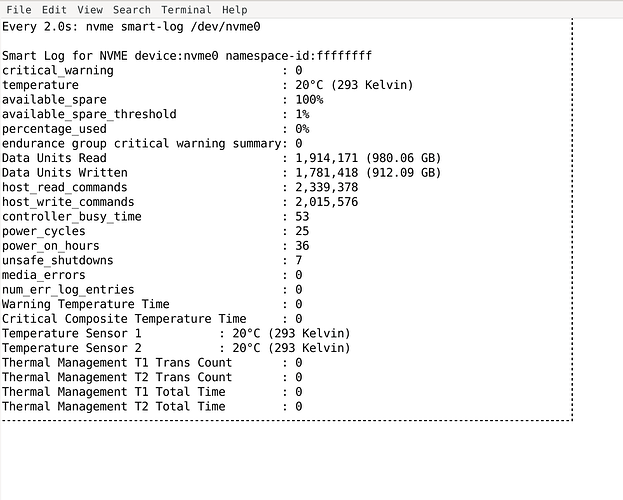

I use the “official” RPi 5 power adapter. The NVMe drive is a Kingston NV2 M.2 500GB. I followed the instructions for setting up the EEPROM config and installing the Argon scripts.

Sometimes the error happens after a few days, other times it happens within 10 seconds of booting. The error does not seem to be related to the temperature of the device: it has happened within 30 seconds of booting after the device had been turned off for 30 minutes.

I have tried the recommended “nvme_core.default_ps_max_latency_us=0 pcie_aspm=off” kernel parameters without success.
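To rule out a typo or a parameter that never reached the kernel, the running command line can be checked against the two suggested settings. This is a minimal sketch, assuming a Linux system that exposes `/proc/cmdline`; the helper name `missing_params` is hypothetical.

```python
from pathlib import Path

# The two parameters suggested by the kernel message quoted earlier.
EXPECTED = ("nvme_core.default_ps_max_latency_us=0", "pcie_aspm=off")

def missing_params(cmdline, expected=EXPECTED):
    """Return the expected parameters that are absent from a kernel command line."""
    present = set(cmdline.split())
    return [param for param in expected if param not in present]

if __name__ == "__main__":
    path = Path("/proc/cmdline")  # command line of the currently running kernel
    if path.exists():
        missing = missing_params(path.read_text())
        print("missing:", missing if missing else "none")
```

If it prints `missing: none`, both parameters were active for the current boot, so the resets are happening despite them.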

Having spent an inordinate amount of time troubleshooting the device, I’m seriously regretting not paying €50 more and getting a NUC at this point.

I hope someone here might be able to give me some advice before it all goes into the trash.

Thank you so much in advance!